🥋 About me

I am currently a Research Assistant at the School of Vehicle and Mobility, Tsinghua University, under the mentorship of Prof. Xinyu Zhang and Prof. Jun Li. I earned my B.Eng in Computer Science and Technology from China University of Mining and Technology (CUMTB) in 2023, where I was guided by Prof. Jiajing Li. To strengthen my research background, I also participated in joint training with Tsinghua University from 2023 to 2025.

My research focuses on 3D computer vision and its application to spatial intelligence in autonomous systems, centered on the core challenge of data consistency (or alignment). Previously, my work focused on the foundational challenge of Spatio-Temporal Alignment for Vehicle-to-Everything (V2X) sensing systems. Recently, I have been actively exploring the potential of Large Foundation Models (LFMs) and Multi-Modal Large Language Models (MLLMs) for this field.

I am actively seeking MPhil/PhD programs to further my research on Large Model technologies and their applications in autonomous systems. Any opportunities or referrals would be greatly appreciated! Please feel free to reach out!

🦄 Research

tl;dr

- 3D computer vision: Registration/Calibration, Perception, SLAM;

- Autonomous Driving: Cooperative Perception, V2X, End-to-End Driving;

- Application of MLLMs & VLA: Scene Understanding, End-to-End Driving, Robot Manipulation.

Overview

My research vision is to equip autonomous systems, such as self-driving vehicles, with robust Spatial Intelligence. Within this broad domain, I focus on the data consistency (or data alignment) problem arising from heterogeneous data inputs (e.g., images, point clouds).

I define this “alignment” as the process of mapping heterogeneous data to a common frame of reference, targeting consistency with real-world geometry or semantics. The varying characteristics of different data types (e.g., density, modality) create significant challenges for the accuracy of this mapping. Historically, this problem has evolved from manual methods to analytical, feature-based approaches, and now to data-driven deep learning models. Traditionally, alignment has been a distinct upstream prerequisite for downstream data fusion. As methods have become data-driven, the boundary between these two tasks has blurred. However, I argue that the importance of alignment is not diminishing; rather, it is evolving from a distinct Explicit task to an Implicit function absorbed by downstream models. This observation leads to my ultimate research goal: to develop a new paradigm for data and feature alignment, moving beyond the current scattered, case-by-case solutions.

The criticality of spatial data consistency suggests the need for a unified framework of its own. My research aims to build this framework. I broadly categorize my contributions into two main areas, following a classification I proposed in my IoT-J survey: Explicit Alignment and Implicit Alignment.

1. Explicit Alignment

Explicit Alignment refers to classical, often upstream, tasks where alignment is the direct and primary objective. The most typical examples are sensor spatio-temporal calibration and data registration. An early portion of my published work, such as our Camera-LiDAR calibration research (T-IM 2023), falls into this category. Another major class of explicit alignment is map-based localization, including SLAM, which is fundamentally a geometric matching and alignment task. I have also contributed to this area (T-ASE 2024).

My primary focus is on the new challenges that arise as autonomous systems evolve from single-agent intelligence to multi-agent cooperative systems (e.g., Vehicle-to-Everything in intelligent transportation). My representative publications (IROS 2024, T-ITS 2025) both investigate the unique data consistency problems in these emerging multi-agent scenarios.
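To make the idea of explicit alignment concrete, here is a toy sketch of the simplest registration problem: estimating the rigid transform that maps matched 2D points from one sensor frame into another. It is only an illustration under strong assumptions (known correspondences, no noise handling, 2D instead of 3D) and is not the method used in any of the papers mentioned above. The function names `estimate_rigid_2d` and `apply_rigid_2d` are hypothetical.

```python
import math


def apply_rigid_2d(points, theta, t):
    """Rotate each 2D point by theta (radians), then translate by t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in points]


def estimate_rigid_2d(src, dst):
    """Least-squares rotation and translation mapping src onto dst.

    Assumes src[i] and dst[i] are already matched (known correspondences).
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")
    n = len(src)
    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    # Accumulate dot and cross terms of the centered point pairs.
    dot = 0.0
    cross = 0.0
    for (xs, ys), (xd, yd) in zip(src, dst):
        xs, ys, xd, yd = xs - sx, ys - sy, xd - dx, yd - dy
        dot += xs * xd + ys * yd
        cross += xs * yd - ys * xd
    # Closed-form optimal rotation angle in 2D.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated source centroid onto the target centroid.
    t = (dx - (c * sx - s * sy), dy - (s * sx + c * sy))
    return theta, t


# Hypothetical example: points seen in a "vehicle" frame and a "roadside" frame.
vehicle_pts = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
roadside_pts = apply_rigid_2d(vehicle_pts, math.radians(30.0), (2.0, -1.0))
theta, t = estimate_rigid_2d(vehicle_pts, roadside_pts)
print(math.degrees(theta), t)
```

In real multi-agent settings, correspondences are unknown and the data are noisy and heterogeneous, which is exactly why registration there is much harder than this closed-form case.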

2. Implicit Alignment

Implicit Alignment is a concept based on my personal observations of current trends. I have observed that while multi-agent cooperative perception models are affected by data alignment accuracy, many new methods demonstrate robustness to alignment errors. This seems to reduce the pressure on upstream alignment, but I argue that these models are absorbing part of the alignment task, performing compensatory alignment within their intermediate feature layers. This reflects a subtle but significant shift in functional responsibility in the era of end-to-end models. My work under review (CoSTr) explores this path by optimizing alignment at the sparse feature level.

Furthermore, this concept of implicit alignment can be observed at even later stages, which I am currently investigating along three lines:

- MLLM-based Scene Understanding: I have observed surprising and promising capabilities of large models in handling alignment, as seen in the experiments of my ongoing paper (WAMoE3D). This will be a major focus of my future work.

- Pose-Free 3D Foundation Model: The development of pose-free 3D foundation models offers new insights into how systems can learn to align data without explicit pose information, which is also what I’m currently investigating.

- End-to-End Driving: I am exploring how tasks further downstream, such as end-to-end driving or planning (UniMM-V2X), react to the quality of upstream data alignment, which helps quantify the impact of both explicit and implicit alignment on final system performance.

🔥 News

- 2025.08: 🎉🎉 Our paper “V2X-Reg++: A Real-time Global Registration Method for Multi-End Sensing System in Urban Intersections” has been accepted by IEEE T-ITS (JCR Q1, IF:8.4).

- 2025.05: 🎉🎉 Our survey paper “Cooperative Visual-LiDAR Extrinsic Calibration Technology for Intersection Vehicle-Infrastructure: A review” was accepted by IEEE IoT-J (JCR Q1, IF:8.9).

- 2024.08: 🎉🎉 Our paper “GF-SLAM: A Novel Hybrid Localization Method Incorporating Global and Arc Features” was accepted by IEEE T-ASE (JCR Q1, IF:5.9).

- 2024.06: 🎉🎉 Our paper “V2I-Calib: A Novel Calibration Approach for Collaborative Vehicle and Infrastructure LiDAR Systems” was orally presented at IROS 2024 (a flagship conference in robotics).

- 2023.11: 🎉🎉 Our paper “Automated Extrinsic Calibration of Multi-Cameras and LiDAR” has been accepted by IEEE T-IM (JCR Q1, IF:6.4).

📝 Publications

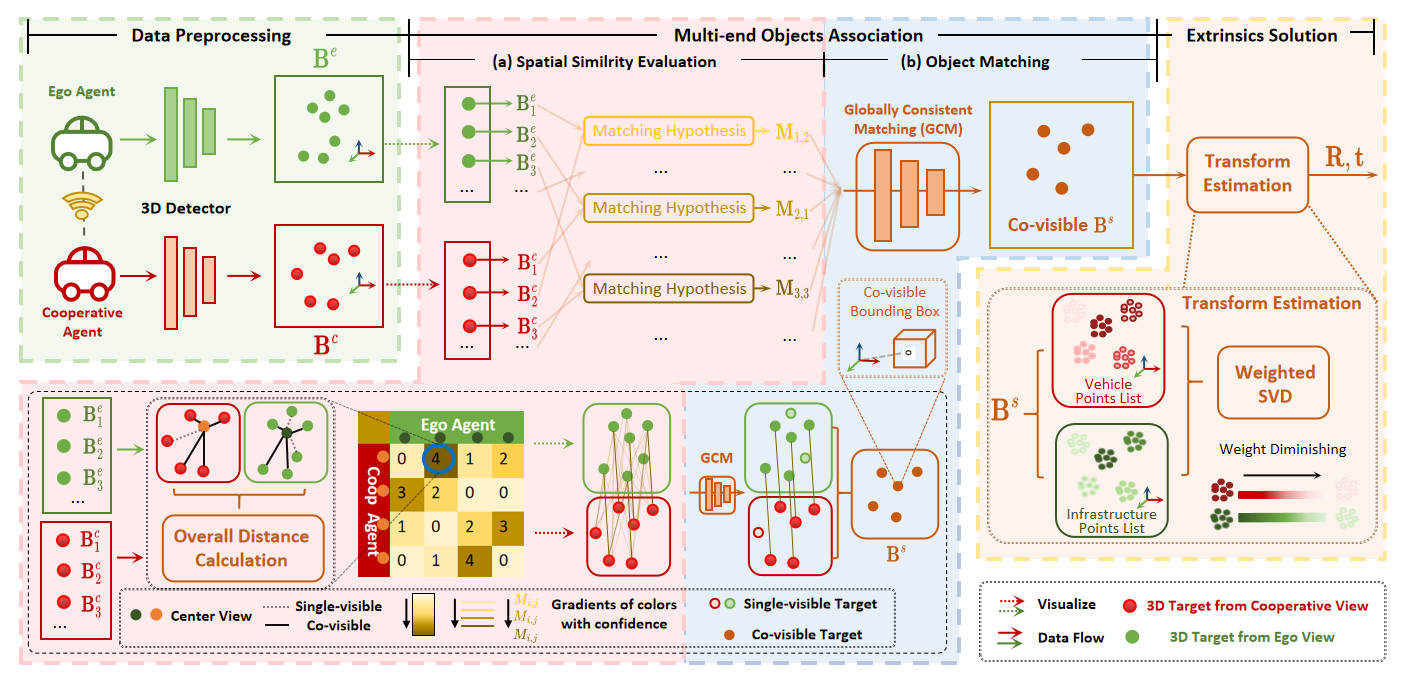

V2X-Reg++: A Real-time Global Registration Method for Multi-End Sensing System in Urban Intersections

Accepted by IEEE Transactions on Intelligent Transportation Systems (T-ITS, JCR Q1, IF:8.4)

tl;dr: We argue that current spatial alignment methods, which require an initial pose, are impractical for real-world Vehicle-to-Everything (V2X) cooperative perception. To address this limitation, we propose an online global registration algorithm that uses perception priors to align heterogeneous sensors in real-time.

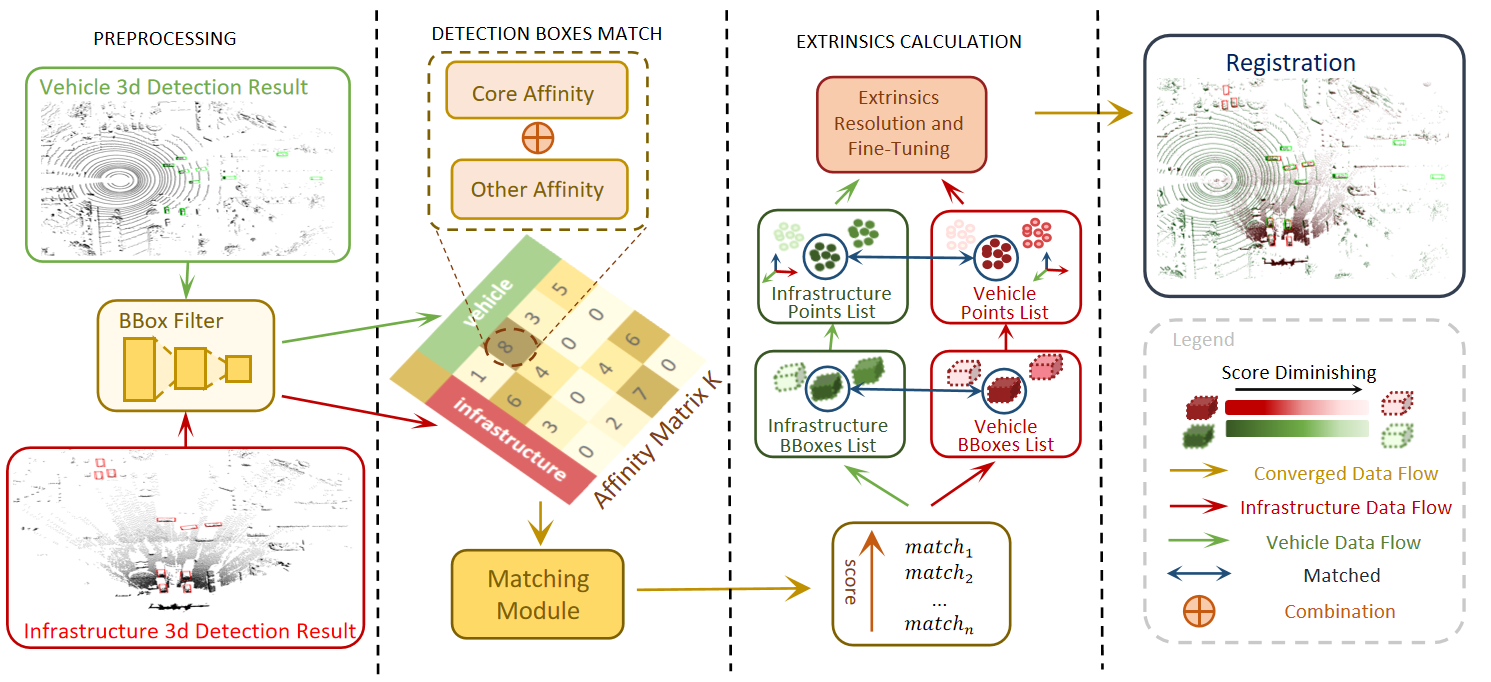

V2I-Calib: A Novel Calibration Approach for Collaborative Vehicle and Infrastructure LiDAR Systems

IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024

tl;dr: We re-examine the evolution of sensor calibration in V2I scenarios, highlighting the shift in demand from static, one-time calibration to dynamic, continuous alignment. We then propose an online global registration algorithm for cross-source point clouds in V2I scenarios.

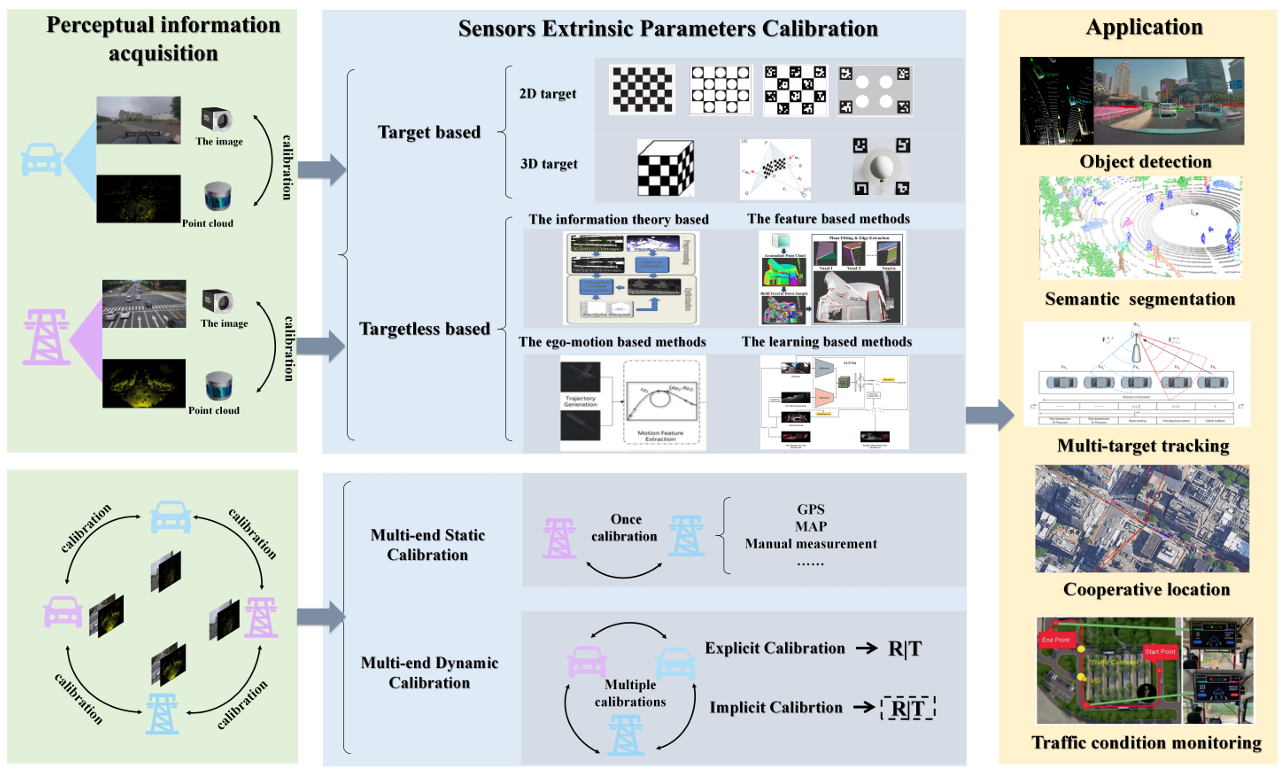

Cooperative Visual-LiDAR Extrinsic Calibration Technology for Intersection Vehicle-Infrastructure: A review

IEEE Internet of Things Journal, 2025 (IoT-J, JCR Q1, IF:8.9, Student First Author)

tl;dr: This survey systematically organizes the evolution of sensor calibration from single-vehicle to cooperative intelligence.

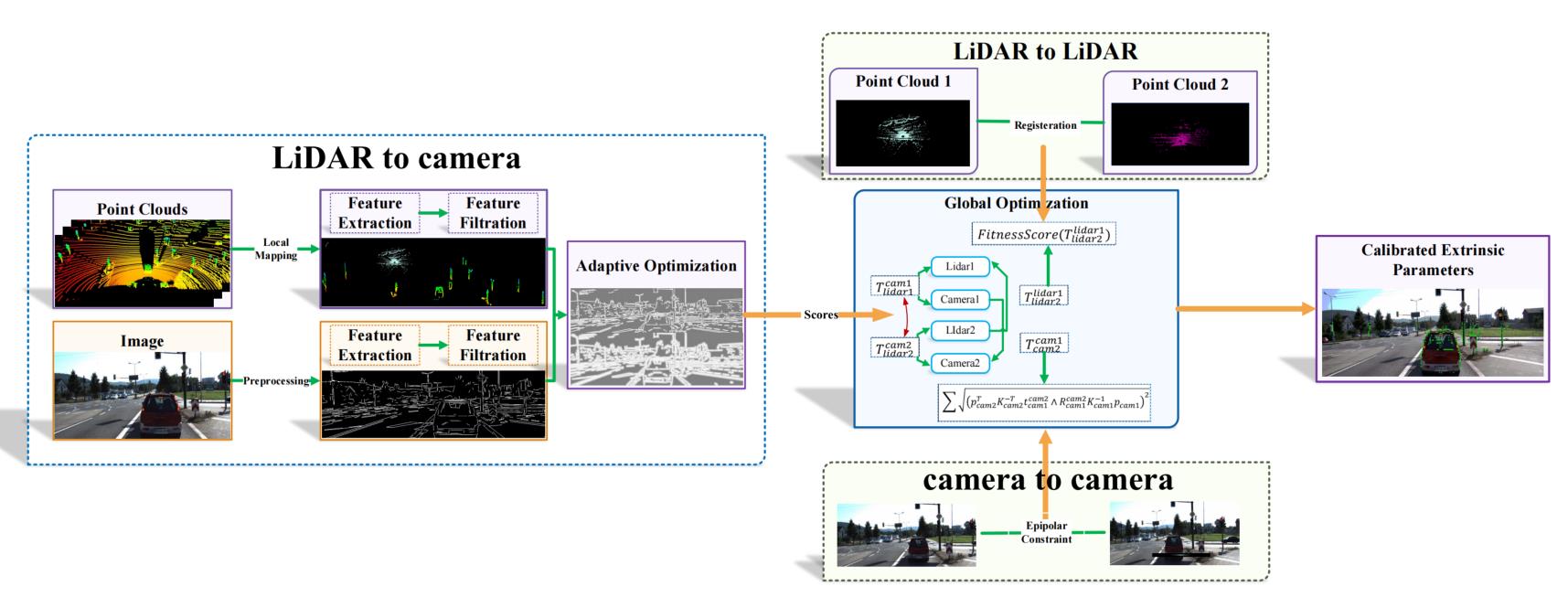

Automated Extrinsic Calibration of Multi-Cameras and LiDAR

IEEE Transactions on Instrumentation and Measurement, 2023 (T-IM, JCR Q1, IF:5.9, Student First Author)

tl;dr: We propose an online, line-feature-based method to address extrinsic parameter drift in Camera-LiDAR systems during operation. Its real-world effectiveness was validated with industry partners (Meituan, MOGOX, and SAIC Motor).

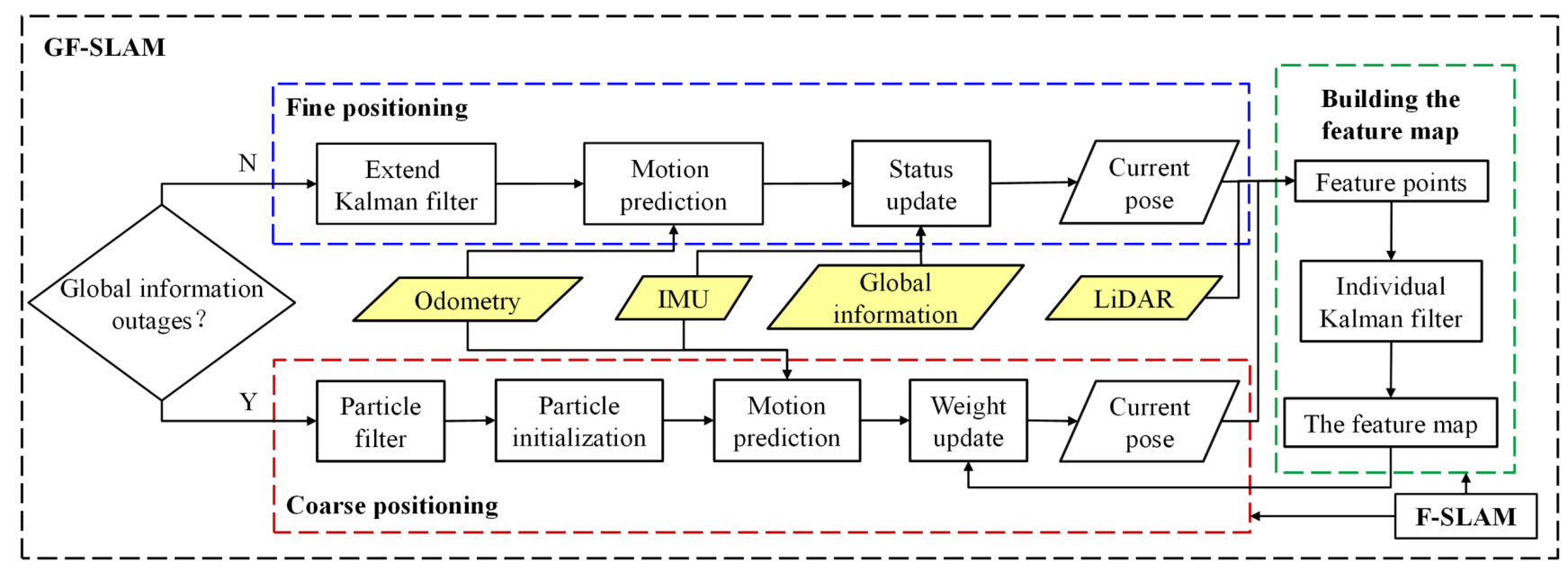

GF-SLAM: A Novel Hybrid Localization Method Incorporating Global and Arc Features

IEEE Transactions on Automation Science and Engineering, 2024 (T-ASE, JCR Q1, IF:6.4)

tl;dr: To address cumulative error in mapping for agricultural scenarios, we propose a robot localization method that fuses global and local environmental features. I was responsible for liaising with the Academy of Agricultural Sciences and implementing the real-world validation.

Under Review

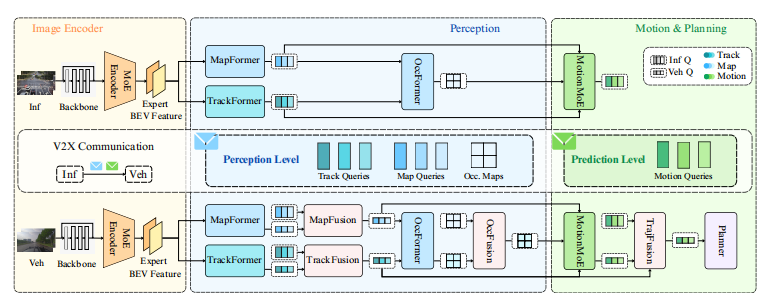

UniMM-V2X: MoE-Enhanced Multi-Level Fusion for End-to-End Cooperative Autonomous Driving

tl;dr: We argue that current cooperative driving methods, which only fuse at the perception level, fail to align with downstream planning and can even degrade performance. To address this limitation, we propose UniMM-V2X, an end-to-end framework that introduces multi-level fusion (cooperating at both perception and prediction levels) enhanced with Mixture-of-Experts (MoE).

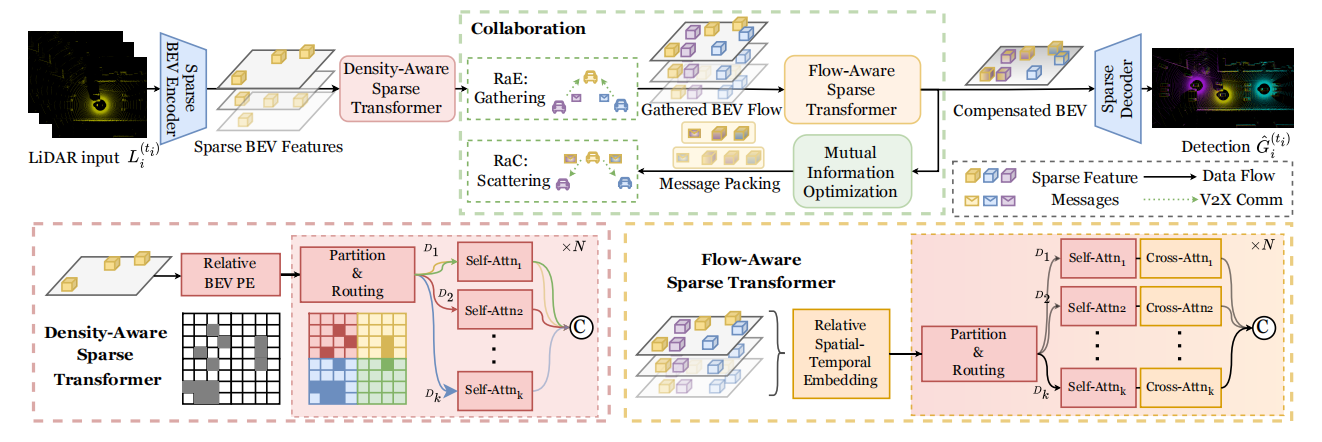

CoSTr: a Fully Sparse Transformer with Mutual Information for Pragmatic Collaborative Perception

tl;dr: Current cooperative perception suffers from redundant dense BEV features, insufficient sparse receptive fields, and poor spatio-temporal robustness. We propose a sparse Transformer, combining mutual information and flow-awareness, to achieve a fully sparse-feature pipeline for cooperative perception.

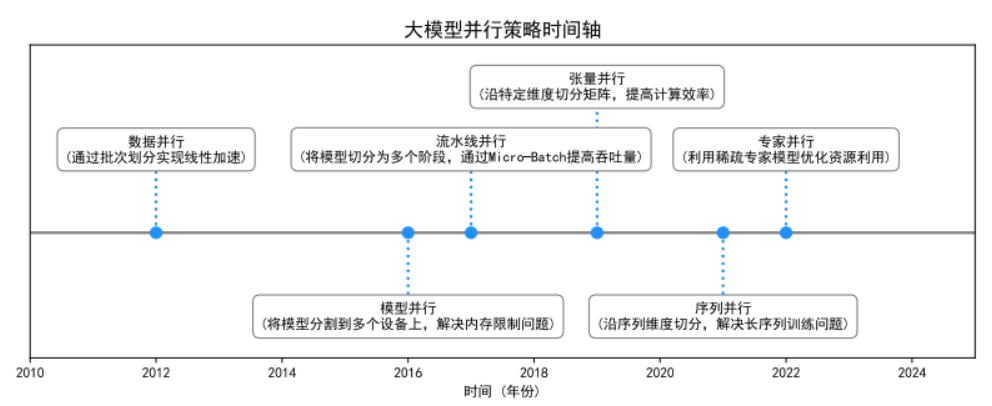

A Survey on Hybrid Parallelism Techniques for Large Model Training

tl;dr: As traditional parallelism fails for massive Transformers, this survey reviews hybrid strategies (DP, TP, PP, SP, EP). We introduce a unified framework based on operator partitioning to analyze these methods and the evolution of automatic parallelism search.

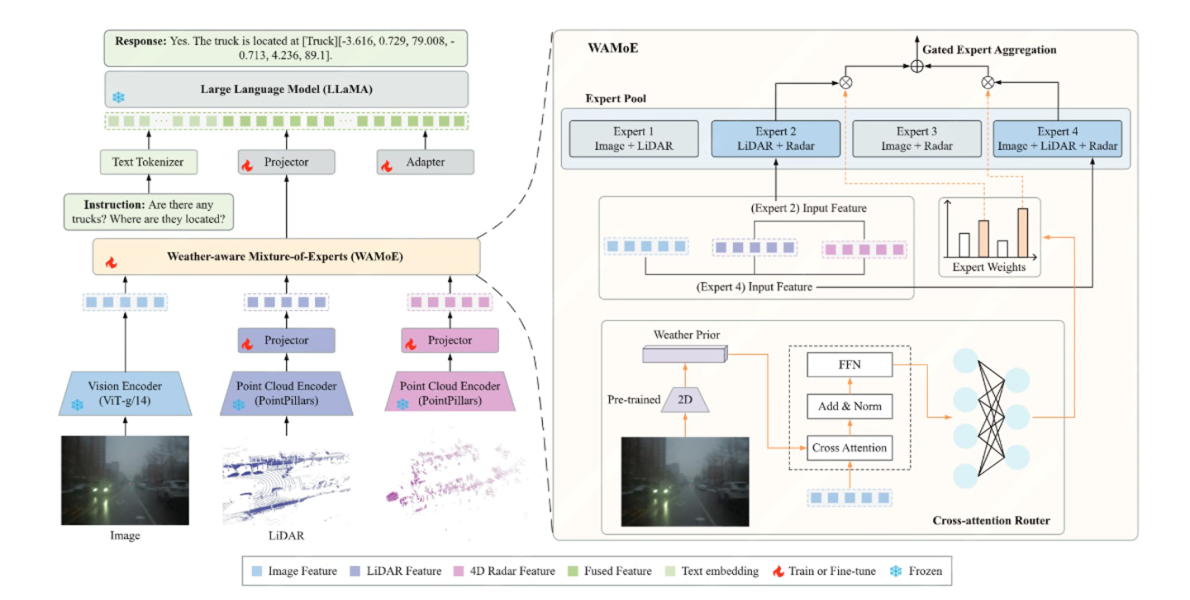

WAMoE3D: Weather-aware Mixture-of-Experts for MLLM-based 3D Scene Understanding in Autonomous Driving

tl;dr: To address the sharp performance drop of MLLMs in adverse weather, we built a VQA dataset and benchmark for traffic scene understanding based on the Dual-Radar dataset. We then proposed an adaptive fusion framework for the LLaMA architecture, utilizing a Weather-aware Mixture-of-Experts (WAMoE) module to dynamically fuse camera, LiDAR, and radar features, coupled with LoRA-based fine-tuning to enhance perception and reasoning capabilities in adverse weather.